Neuromorphic Chips: Silicon Brains Poised to Revolutionize Computing

A new class of processor is quietly reshaping how engineers think about computing. Neuromorphic chips, whose circuits are modeled on the brain's networks of neurons and synapses, aim to make computing both more adaptive and far more energy-efficient. By narrowing the gap between artificial and biological intelligence, they could change how devices from smartphones to autonomous vehicles process information.

The journey from concept to reality has been long and challenging. Early attempts at neuromorphic computing were limited by the available technology and understanding of neural networks. However, recent advancements in materials science, neuroscience, and machine learning have breathed new life into this field, propelling neuromorphic chips from theoretical curiosity to practical application.

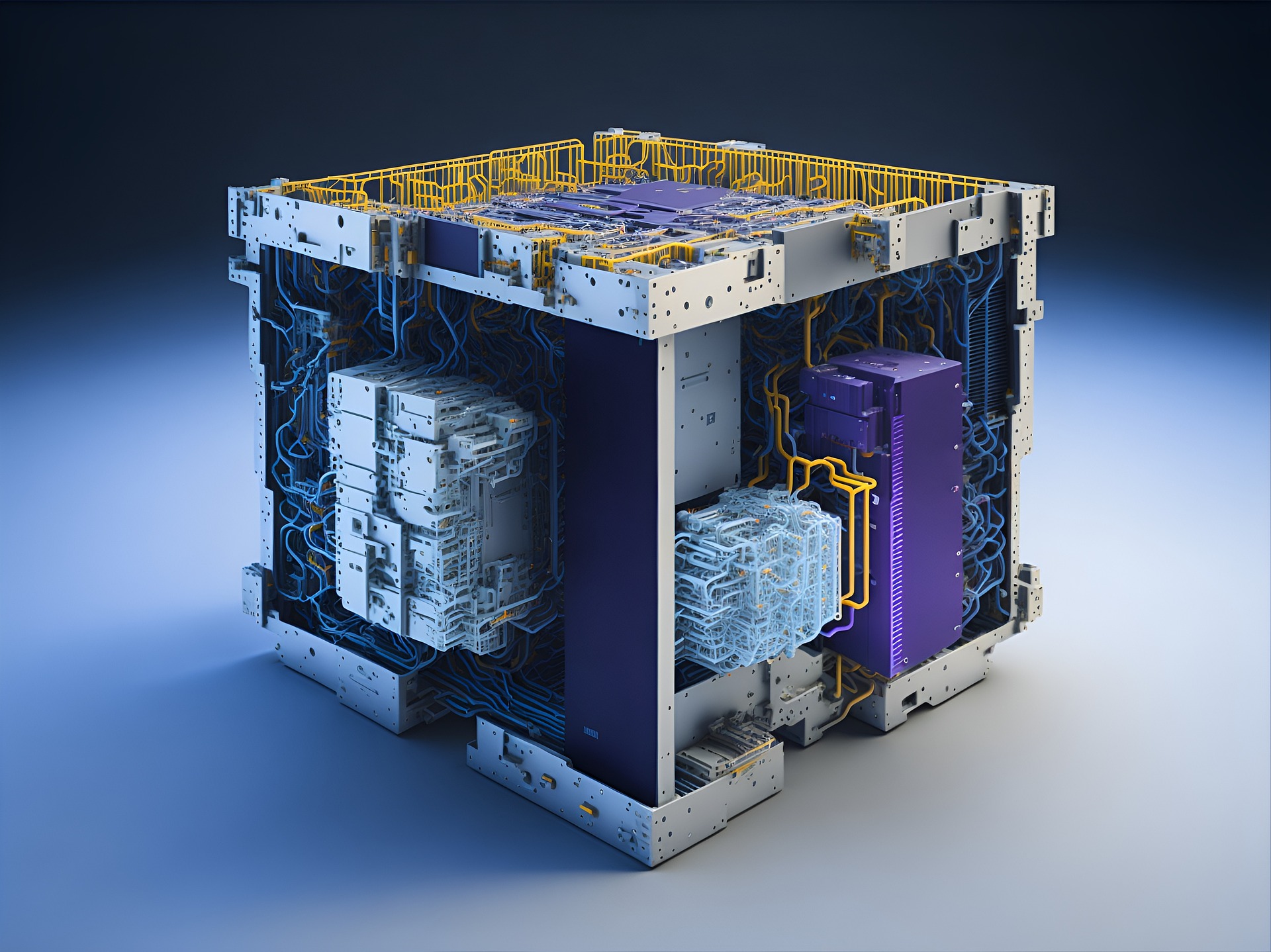

Anatomy of a Silicon Neuron

At the heart of neuromorphic chips lie artificial neurons and synapses, designed to emulate their biological counterparts. These silicon neurons communicate through spikes, brief pulses whose timing and frequency carry the information, mimicking the way real neurons signal one another. The synapses connecting these artificial neurons can strengthen or weaken over time, replicating the brain’s plasticity and learning capabilities.
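To make the idea of spiking neurons and plastic synapses concrete, here is a minimal Python sketch. It assumes a leaky integrate-and-fire neuron model and a pair-based spike-timing-dependent plasticity (STDP) rule, two textbook simplifications rather than the circuit of any particular chip. The function names and every parameter value (`tau`, `v_thresh`, `a_plus`, and so on) are illustrative assumptions.

```python
import math


def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns the list of step indices at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    v = v_rest
    spike_steps = []
    for step, current in enumerate(input_current):
        # Forward-Euler update: leak toward rest, then add the input.
        v += dt * (-(v - v_rest) / tau + current)
        if v >= v_thresh:
            spike_steps.append(step)  # the neuron fires...
            v = v_reset               # ...and its potential resets
    return spike_steps


def stdp_update(weight, dt_spike, a_plus=0.05, a_minus=0.055,
                tau_stdp=20.0, w_min=0.0, w_max=1.0):
    """Pair-based STDP for one pre/post spike pair.

    dt_spike = t_post - t_pre. If the presynaptic spike came first
    (dt_spike > 0) the synapse strengthens; if it came second
    (dt_spike < 0) the synapse weakens. The result is clamped
    to the range [w_min, w_max].
    """
    if dt_spike > 0:
        weight += a_plus * math.exp(-dt_spike / tau_stdp)
    elif dt_spike < 0:
        weight -= a_minus * math.exp(dt_spike / tau_stdp)
    return min(w_max, max(w_min, weight))


if __name__ == "__main__":
    # A steady input strong enough to cross threshold gives regular spikes.
    print(simulate_lif([0.2] * 50))
    # Pre-before-post strengthens the synapse; post-before-pre weakens it.
    print(stdp_update(0.5, 5.0), stdp_update(0.5, -5.0))
```

A weaker input, such as `[0.05] * 50`, never reaches the threshold with these settings, so the neuron stays silent. That silence is what makes the event-driven power savings possible.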

One of the most exciting aspects of neuromorphic chips is their ability to operate on very little power. Traditional processors are driven by a clock and burn energy on every cycle whether or not there is useful work to do. Neuromorphic chips are event-driven: most of their circuitry stays idle until a neuron fires, mirroring the brain’s energy efficiency. This could lead to devices that not only process information more like humans but also consume far less power.
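A rough way to see why event-driven operation saves energy is to count work rather than measure watts. The Python sketch below compares how many synaptic updates a clock-driven design would perform (every neuron, every time step) with how many an event-driven design would perform (only when a neuron actually spikes). It assumes that the operation count is a reasonable stand-in for dynamic energy, which ignores leakage and communication costs. The function names, the fan-out of 100, and the 2% firing probability are hypothetical values chosen for illustration.

```python
import random


def count_synaptic_ops(spike_raster, fan_out):
    """Compare clock-driven and event-driven synaptic update counts.

    spike_raster[t][i] is 1 if neuron i fired at time step t, else 0.
    fan_out is the assumed number of outgoing synapses per neuron.
    Returns (clocked_ops, event_ops).
    """
    n_steps = len(spike_raster)
    n_neurons = len(spike_raster[0]) if n_steps else 0
    # Clock-driven: every synapse is evaluated on every step.
    clocked_ops = n_steps * n_neurons * fan_out
    # Event-driven: synapses are touched only when their source neuron fires.
    total_spikes = sum(sum(row) for row in spike_raster)
    event_ops = total_spikes * fan_out
    return clocked_ops, event_ops


def random_raster(n_steps, n_neurons, firing_prob, seed=0):
    """Generate a sparse random spike raster (illustrative input only)."""
    rng = random.Random(seed)
    return [[1 if rng.random() < firing_prob else 0
             for _ in range(n_neurons)]
            for _ in range(n_steps)]


if __name__ == "__main__":
    raster = random_raster(n_steps=1000, n_neurons=256, firing_prob=0.02)
    clocked, event = count_synaptic_ops(raster, fan_out=100)
    print(f"clock-driven ops: {clocked}")
    print(f"event-driven ops: {event}")
    print(f"fraction of work actually needed: {event / clocked:.3f}")
```

The sparser the activity, the bigger the gap. If the neurons fired on every step, the two counts would be identical and the advantage would disappear.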

From Lab to Market: Current Applications and Future Potential

Neuromorphic chips are no longer confined to research labs. Companies like Intel, IBM, and BrainChip are already bringing this technology to market. Intel, for instance, has reported that its Loihi chip can solve certain specialized problems up to 1,000 times faster than traditional processors while using significantly less power.

The potential applications of neuromorphic computing are vast and varied. In robotics, these chips could enable more natural and adaptive movements. For autonomous vehicles, they could provide faster, more efficient decision-making capabilities. In the realm of IoT devices, neuromorphic chips could allow for sophisticated on-device AI without the need for cloud connectivity.

Perhaps most intriguingly, neuromorphic computing could revolutionize our understanding of the human brain itself. By creating silicon models of neural networks, researchers can gain new insights into how our brains process information, learn, and adapt.

Challenges on the Horizon

Despite the promise of neuromorphic computing, significant challenges remain. Scaling up these chips to match the complexity of the human brain, with its roughly 86 billion neurons and 100 trillion synapses, is a daunting task. Moreover, programming these chips requires a shift away from traditional coding methods: instead of writing a sequence of instructions, developers must describe networks of spiking neurons and how they learn, which calls for new tools and approaches.
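Some back-of-the-envelope arithmetic shows how large the scaling gap is. The Python sketch below takes the brain figures quoted above and asks how many chips would be needed if each chip held a given number of neurons and synapses. The per-chip capacities in the example (one million neurons, 256 million synapses) are illustrative round numbers assumed for the calculation. The estimate also ignores the wiring between chips, which in practice is a major obstacle of its own.

```python
BRAIN_NEURONS = 86_000_000_000        # roughly 86 billion
BRAIN_SYNAPSES = 100_000_000_000_000  # roughly 100 trillion


def ceil_div(a, b):
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def chips_needed(neurons_per_chip, synapses_per_chip):
    """Estimate the chip count needed to hold a brain-scale network.

    Returns (chips_by_neurons, chips_by_synapses, chips_required), where
    chips_required is whichever constraint demands more chips.
    The per-chip capacities are caller-supplied assumptions.
    """
    by_neurons = ceil_div(BRAIN_NEURONS, neurons_per_chip)
    by_synapses = ceil_div(BRAIN_SYNAPSES, synapses_per_chip)
    return by_neurons, by_synapses, max(by_neurons, by_synapses)


if __name__ == "__main__":
    # Hypothetical chip: 1 million neurons and 256 million synapses.
    by_n, by_s, total = chips_needed(1_000_000, 256_000_000)
    print(f"chips needed for neurons:  {by_n:,}")
    print(f"chips needed for synapses: {by_s:,}")
    print(f"chips required overall:    {total:,}")
```

Under these assumptions the synapses, not the neurons, set the limit: the brain's connectivity of roughly a thousand synapses per neuron is denser than the hypothetical chip can supply.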

Another hurdle is the narrow range of tasks that neuromorphic chips currently perform efficiently. While they excel at certain types of computation, particularly pattern recognition and sensory processing, they’re not yet suitable for all computing tasks.

The Road Ahead: Integrating Silicon and Wetware

As neuromorphic technology matures, we may see a convergence of silicon-based computing and biological systems. Researchers are already exploring ways to interface neuromorphic chips with living neurons, opening up possibilities for advanced neuroprosthetics and brain-computer interfaces.

The future of neuromorphic computing is both exciting and uncertain. As these chips become more sophisticated and widely adopted, they could fundamentally change our relationship with technology. Devices that can learn, adapt, and think more like humans could lead to more intuitive and personalized user experiences.

In the coming years, we can expect to see neuromorphic chips making their way into more consumer devices, scientific instruments, and industrial applications. While they may not replace traditional computing entirely, they represent a significant step towards more brain-like artificial intelligence.

As this neuromorphic shift gathers pace, one thing is clear: the line between silicon and wetware is blurring, and the future of computing looks increasingly inspired by the very organ that conceived it, the human brain.